The population of the earth has grown, as has global trade. As a result, a greater number of people with varying physical attributes must be considered during product development, whether the product is a set of headphones, the interior of a car, a seat on an airplane, or the spatial plan of a new facility. Human attributes such as gender, age, body type, and weight are just a few of the variables that create a large and varied range of potential form factors to factor into design requirements such as reach, visibility, fit, and comfort.

Why Practice Human-Centered Product Design?

Developing a new product in today’s digital realm without considering how a human will interact with it until late in the design cycle can be an expensive oversight. Waiting to test and evaluate the many variables of the human experience on physical prototypes in the real world can lead to costly form, fit, and function issues. These can range from unexpected interferences between the operator and the product to something as simple as the individual not fitting in the product environment.

The 3DEXPERIENCE CATIA Human Design Solution

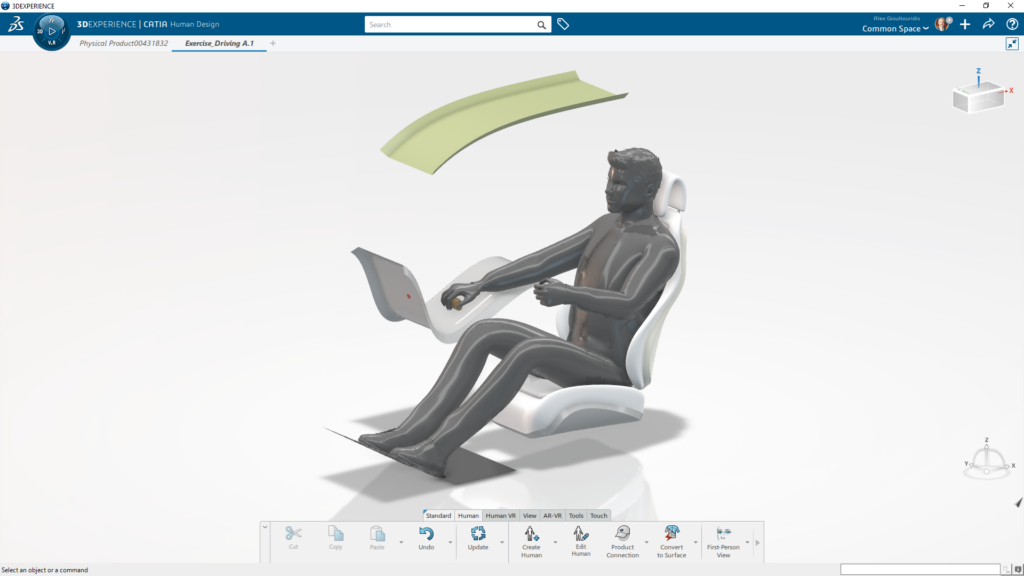

With the Human Design app and the Human Animation Studio app extension, 3DEXPERIENCE CATIA gives designers a virtual 3D human-centered design solution to imagine, design, adapt, experience, and review the physical environment’s compliance with initial Human Factors criteria.

Product designers can create and animate human avatars with advanced cosmetic customization properties and advanced posturing capabilities using the Human Design and Human Animation Studio apps. With virtual 3D human avatars present in the context of the 3DEXPERIENCE digital design environment, product designers can perform conceptual human-centered design to enhance the product and environment by providing:

- Improved scale perspective to the product by including a virtual 3D human

- Visualization of how a human will interact with a design/product/environment

- Detection of potential interferences between the virtual human model and the product/environment

- First person view from the virtual human perspective to detect potential vision issues (such as potential blind spots)

- Foundation for creating rich marketing and presentation materials with advanced renderings containing realistic materials and background ambiances

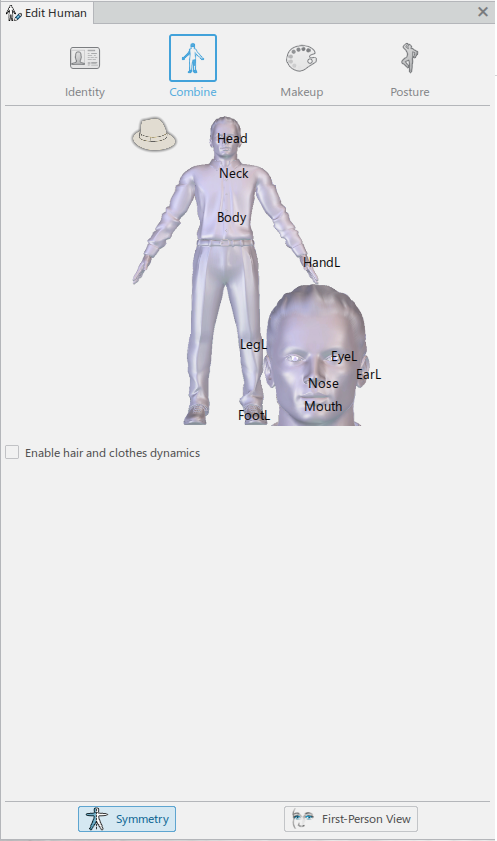

The Human Design app and its supporting Human Animation Studio app extension for animated simulations deliver an easy-to-use solution in which Inverse Kinematics (IK) is already defined within the virtual human model. To manipulate the virtual human, select a body part (e.g. hand or arm) and use the robot (aka Compass for V5 users) to position it.
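To give a sense of what a built-in IK definition spares the designer, here is a minimal sketch of the idea on a simplified planar arm with two segments (upper arm and forearm). It is not the solver used by the Human Design app, whose internals are not described here; the function names, segment lengths, and the two-dimensional simplification are all assumptions made for illustration. Given a target hand position, it computes the shoulder and elbow angles that reach it.

```python
import math


def two_link_ik(x, y, upper_len, fore_len):
    """Return (shoulder, elbow) angles in radians placing the hand at (x, y).

    Hypothetical planar arm: the shoulder sits at the origin and the elbow
    angle is measured relative to the upper arm.
    """
    dist_sq = x * x + y * y
    # Law of cosines gives the elbow bend. Clamping means an unreachable
    # target resolves to a fully stretched (or fully folded) arm.
    cos_elbow = (dist_sq - upper_len**2 - fore_len**2) / (2 * upper_len * fore_len)
    cos_elbow = max(-1.0, min(1.0, cos_elbow))
    elbow = math.acos(cos_elbow)
    # Aim at the target, then correct for the offset the bent forearm adds.
    shoulder = math.atan2(y, x) - math.atan2(
        fore_len * math.sin(elbow), upper_len + fore_len * math.cos(elbow)
    )
    return shoulder, elbow


def hand_position(shoulder, elbow, upper_len, fore_len):
    """Forward kinematics: where the hand ends up for the given angles."""
    elbow_x = upper_len * math.cos(shoulder)
    elbow_y = upper_len * math.sin(shoulder)
    hand_x = elbow_x + fore_len * math.cos(shoulder + elbow)
    hand_y = elbow_y + fore_len * math.sin(shoulder + elbow)
    return hand_x, hand_y


# Assumed segment lengths in metres, chosen only for the example.
shoulder, elbow = two_link_ik(0.4, 0.3, upper_len=0.30, fore_len=0.25)
print(hand_position(shoulder, elbow, 0.30, 0.25))
```

Feeding the solved angles back through `hand_position` should reproduce the target, which is a quick way to sanity-check any IK routine. A full-body manikin involves many more joints and joint limits, which is why having IK predefined in the model is a convenience.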

What’s more, the Human Design app is included with many 3DEXPERIENCE engineering-based roles. You may already have the app without knowing it. To find out, select the 3DEXPERIENCE Compass and then select your active Role(s) to see a list of all the included apps.

Before continuing, it is important to note that the virtual human avatars created from the Human Design app are not anthropometrically accurate and should be used for preliminary work. Where a more accurate analytical ergonomic solution is required, the 3DEXPERIENCE platform offerings include dedicated Ergonomics roles or RAMSIS for 3DEXPERIENCE extensions that enable:

- Design based on anthropometric requirements

- Increased accuracy for Vision, Reach, Comfort, Force, and Safety

- Use of anthropometrically accurate models and posture prediction

That said, 3DEXPERIENCE 2022x introduced the VR Incarnation function, which improves the accuracy of the virtual human avatar in the environment. Users synchronize their real-world features, such as height and reach, to their virtual human avatar in the 3DEXPERIENCE environment (more details on this topic below).

How to Use the 3DEXPERIENCE Human Design App

3DEXPERIENCE Manikin Types and Appearances

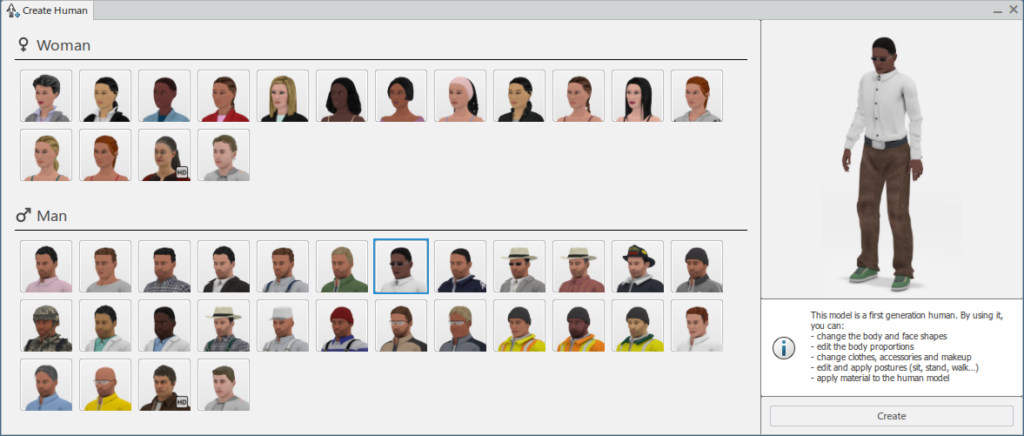

The 3DEXPERIENCE Human Design app provides three types of manikins – also known as Human Templates – to create human avatars. Each of the Human Templates offers its own purpose and features in the 3DEXPERIENCE virtual environment:

- First Generation Model – Useful for conceptual design where the importance is placed on the appearance of the manikin for presentations, such as applying textures and clothing.

- Generative Human – Although not anthropometrically accurate, the Generative Human template provides users with the ability to sculpt the body and shape of the manikin to provide a more realistic representation of body types, ages, and gender.

- HD Model – Useful for presentations and marketing material where the emphasis is on a high-resolution model for realistic rendering scenarios.

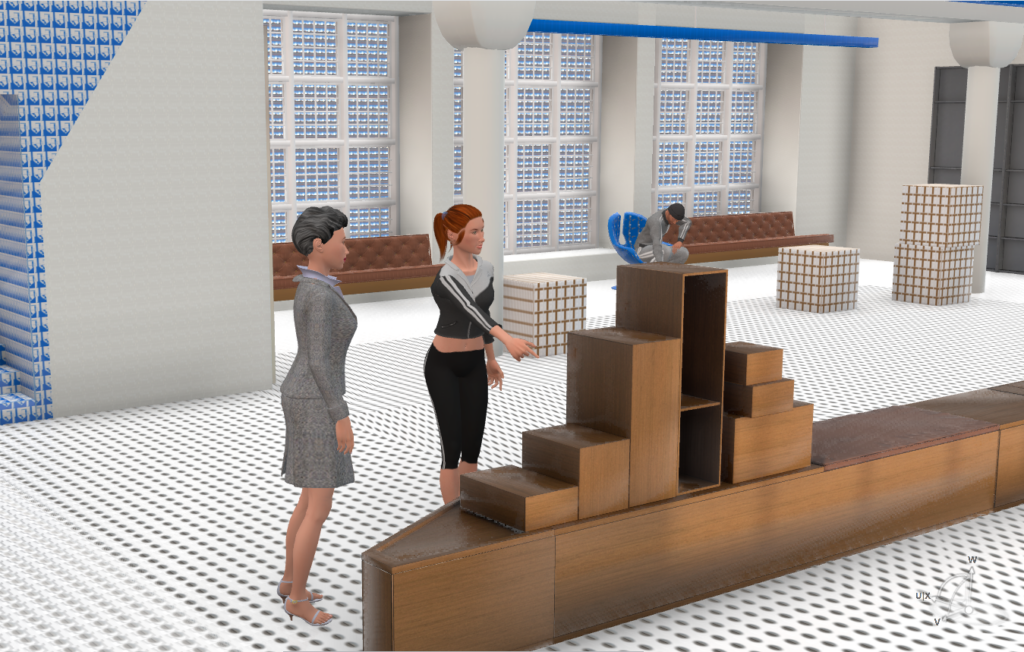

Below is an image of a 3DEXPERIENCE virtual design environment that contains representations of each of the three Human Templates.

Below is a tabular breakdown of the types of actions that can be performed with each Human Template:

Human Design – Instantiate & Posture

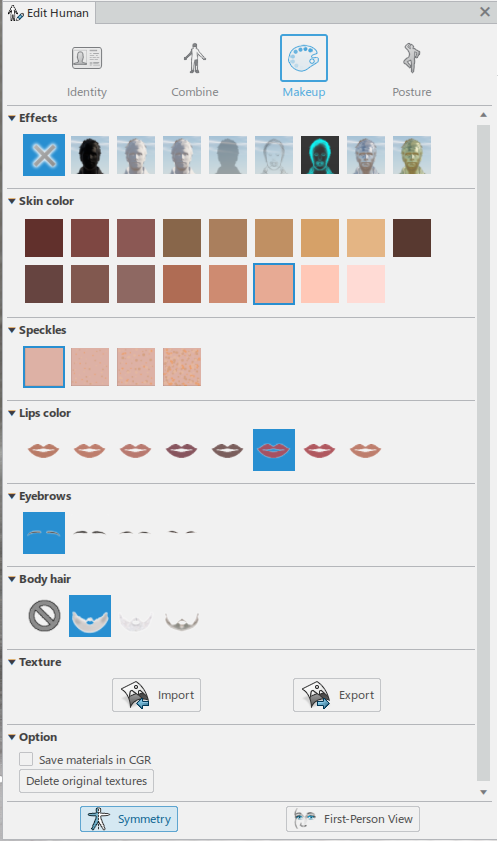

Introducing a virtual human adds an enhanced level of detail to your design environment, and adding one is a simple pick-and-place action. The instantiated Human Template is represented in the Tree as its own unique 3DEXPERIENCE object that can be configured, saved, and re-used. Depending on the selected Human Template, the properties of the virtual human avatar can be configured to the desired result through an easy-to-use interface. Initially, users define the body type, proportions, and other aspects of visual appearance. Below is a list of the different properties that can be configured:

- Body type and face shape

- Body proportions

- Clothes, accessories, and makeup

- Posture

- Materials for special effects

Below is an image of a 3DEXPERIENCE virtual design environment that contains representations of each of the three Human Templates.

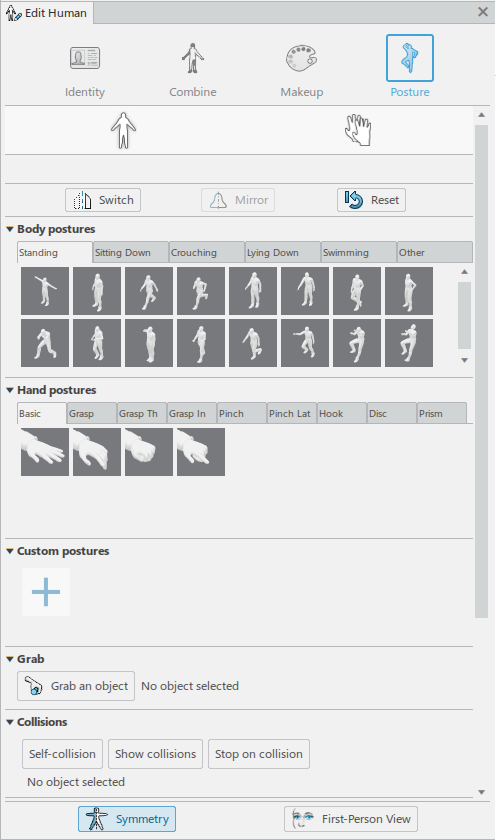

Human Design – Posturing in the Environment

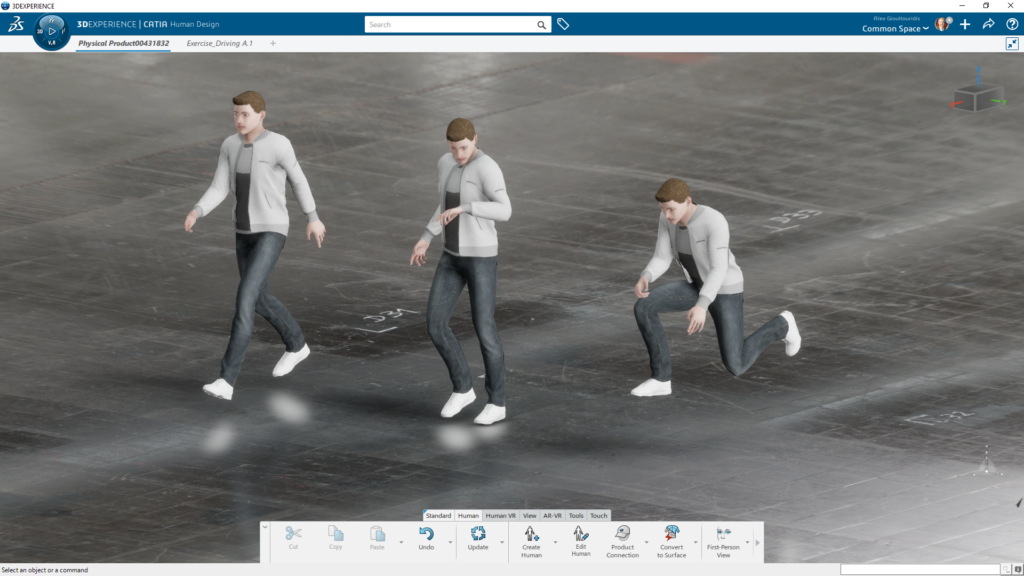

Once the virtual human avatar is configured and placed in the digital environment, designers can easily set the manikin posture using a library of predefined postures. The library covers numerous common postural categories, including Standing (e.g. jogging), Sitting (e.g. driving), and others such as Crouching. It also extends to common Hand Postures to manage the numerous grip positions of the human hand. For custom postural scenarios, designers can save and recall user-defined postures.

Alternatively, the Human Design app provides a manual method of defining a posture, either to create a new posture to add to the existing library or to fine-tune an existing posture to meet the requirements of the design environment. In addition to posturing the manikin, 3DEXPERIENCE provides the ability to define predictive posturing for grip, where designers can configure the hands of the manikin to interact with the digital models in their environment (e.g. gripping a steering wheel).

WATCH VIDEO: Human Design app posture and grip

For another way of perceiving the digital design environment, designers can also switch from a third-person view to a first-person view through the eyes of the human avatar.

For scenarios where the virtual human, in the context of the design environment, must be communicated to external parties, 3DEXPERIENCE provides the ability to create a surface model of the virtual human in its current posture. The surface geometry of the manikin can then be included when sharing data in a neutral file format such as 3DXML or STEP.

The Addition of Virtual Reality (VR) and Augmented Reality (AR)

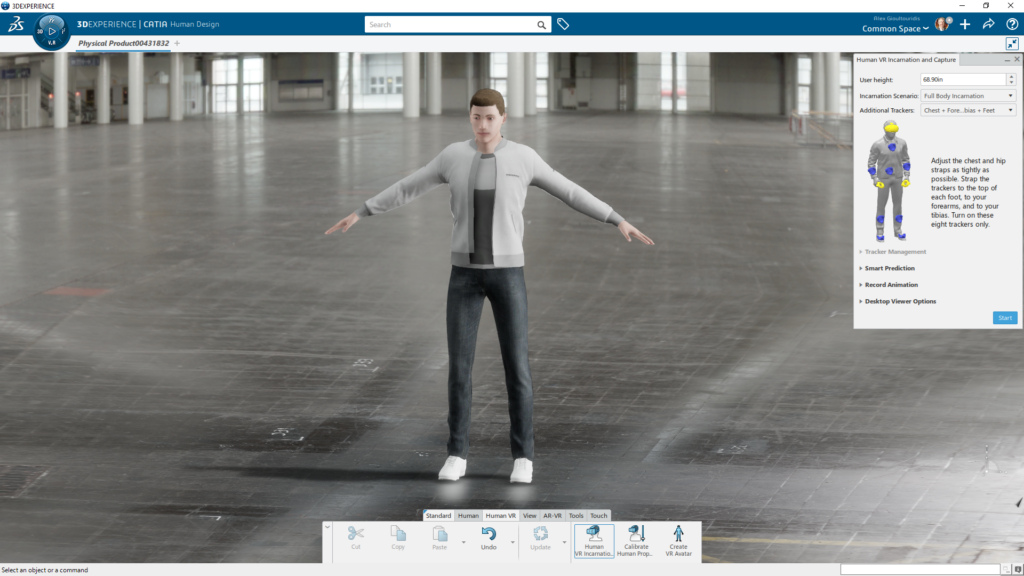

Create a life-like experience to evaluate the product or product environment by immersing yourself in the 3DEXPERIENCE digital environment using Virtual Reality (VR) technology. The VR experience is initiated by putting on an HMD (head-mounted display) and attaching VR tracking sensors to selected body parts of the individual to be immersed in the digital product environment. Depending on the available VR equipment, users can calibrate the Height, Hand Length, Forearm Length, Arm Length, and Shoulder Length of the real human to the 3DEXPERIENCE virtual human avatar for improved realism and accuracy while interacting in the virtual environment.
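Conceptually, calibrating a real person to an avatar amounts to comparing measured body dimensions against the avatar's defaults. The sketch below is only an illustration of that idea, not the calibration routine used by 3DEXPERIENCE; the function name, the dictionary keys, and every numeric value are hypothetical. It derives a scale factor for each measured dimension and leaves unmeasured dimensions unchanged, which mirrors the point that the available measurements depend on the VR equipment.

```python
def segment_scale_factors(default_mm, measured_mm):
    """Return a scale factor per body dimension (measured / default).

    Dimensions without a measurement keep a factor of 1.0, as might happen
    when the available sensors cannot capture them.
    """
    factors = {}
    for segment, default in default_mm.items():
        measured = measured_mm.get(segment)
        factors[segment] = 1.0 if measured is None else measured / default
    return factors


# Hypothetical default avatar dimensions in millimetres.
avatar_defaults = {
    "height": 1750.0,
    "hand_length": 190.0,
    "forearm_length": 250.0,
    "arm_length": 300.0,
    "shoulder_length": 400.0,
}

# Hypothetical measurements from a setup that tracks only two dimensions.
user_measurements = {"height": 1820.0, "forearm_length": 265.0}

for segment, factor in segment_scale_factors(avatar_defaults, user_measurements).items():
    print(f"{segment}: x{factor:.3f}")
```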

Following a few quick calibration steps, the sensors on the person wearing the VR equipment are synchronized with the 3DEXPERIENCE digital environment. Introduced with 3DEXPERIENCE 2022x, Human VR Incarnation enables the motions of the individual wearing the VR equipment to be mimicked by the virtual human avatar. The individual can walk through and explore the digital environment and interact with the product to gain a more realistic perspective as the product consumer. Estimating the visibility or reachability of controls, examining the feasibility of servicing a piece of equipment, and digital training are just a few examples of the benefits of using VR in your 3DEXPERIENCE environment.

It is also now possible to include the virtual human avatar in an existing 3DEXPERIENCE mechanism (Note: the CATIA Mechanical Systems Design app is a prerequisite). Connect the manikin to your digital product to explore how it responds to the boundary conditions of the defined product mechanism. This is accomplished by attaching specific body parts of the human avatar to the selected 3DProduct of the mechanism. Upon launching the Mechanism Player, the user can manually move the mechanism and evaluate how the human avatar moves along with the mechanism of the 3D product. This is helpful for understanding preliminary range of motion and interference between the virtual human and the product in the digital realm, before expensive physical prototypes are created.

Animated Simulations of Virtual Humans

Lastly, users can create animations of the virtual human in their digital environment using the Human Animation Studio app, an extension of the Human Design app. The animations are created using a Keys methodology: the virtual human's posture and position are recorded at specified time intervals, and 3DEXPERIENCE then animates the virtual human moving between each recorded posture Key. This can be helpful as a visual reference in presentations to help stakeholders understand how people will interact with the product or product environment.
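The general idea behind key-based animation can be sketched in a few lines. The example below assumes simple linear interpolation of joint angles between recorded Keys; the article does not state how Human Animation Studio actually interpolates, so treat this as a generic illustration. The function name, joint names, times, and angle values are all hypothetical.

```python
from bisect import bisect_right


def interpolate_posture(keys, t):
    """Return the posture at time t by blending the two surrounding Keys.

    keys: list of (time_seconds, {joint_name: angle_degrees}), sorted by
    time with distinct times. Times outside the recorded range hold the
    first or last Key.
    """
    times = [time for time, _ in keys]
    if t <= times[0]:
        return dict(keys[0][1])
    if t >= times[-1]:
        return dict(keys[-1][1])
    i = bisect_right(times, t)  # index of the first Key after t
    t0, posture0 = keys[i - 1]
    t1, posture1 = keys[i]
    weight = (t - t0) / (t1 - t0)
    # Linear blend per joint (an assumption; real tools may ease or spline).
    return {
        joint: posture0[joint] + weight * (posture1[joint] - posture0[joint])
        for joint in posture0
    }


# Two hypothetical Keys: arm relaxed at 0 s, arm raised at 2 s.
keys = [
    (0.0, {"shoulder": 10.0, "elbow": 0.0}),
    (2.0, {"shoulder": 30.0, "elbow": 90.0}),
]
print(interpolate_posture(keys, 1.0))  # halfway between the two Keys
```

Recording only a handful of Keys and letting the software fill in the frames between them is what keeps this kind of animation quick to author.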

Questions?

If you have any questions or would like to learn more about CATIA Human Design on the 3DEXPERIENCE platform, please contact us at (954) 442-5400 or submit an online inquiry.